In my mother tongue, when someone does not know (or does not want to share) the reason for their actions, they say “Lebo medved” (= because of the bear). That throws the actual bears off balance, because they do not have a clue why you would do that either. Having gone through several elections in the past few months, I realized that elections are “Lebo Hadoop” = a bit like Hadoop. You don’t get why? Well, then let both you and the yellow elephants understand why:

Hadoop and other technologies for distributed computing and data analytics are still less common in our society than their progress would suggest. When explaining the concept to managers, I quite commonly have to argue that implementing Hadoop (which they have heard of at every recent business conference) is not like swapping Fords for Volkswagens, or like migrating from MS SQL to Oracle. To really embrace distributed technologies means a rather massive change to the company's business processes. At this stage I usually run out of managerial understanding and start to hear statements like “After all, Hadoop is a piece of software, so it can just be installed in place of the current software, can’t it?” You roll your eyes and start to wonder whether it is actually worth investing further effort in clarification when the chances of success are so small. And exactly for these situations the following explanation comes in handy:

In most European countries, elections are organized by voting districts. Every voter is registered at exactly one polling station, usually the one closest to his/her home address. That makes sure the voter does not have to travel too far to the polling station, and it minimizes the time a single voter needs to cast his/her vote. As soon as voting time is over, the local polling station (through the hands of the local electoral committee) starts to count the votes cast for the different candidates. After they finish their work, they draw up an election protocol summarizing the results of the vote at the given polling station. This protocol then travels to the county electoral committee, which sums up all of its polling stations, and then on through the regional and national electoral committees, which sum up the entire election result. We feel this process to be somewhat natural, as we have got used to it over the decades. But let’s imagine it were all done differently …

Imagine the whole country had to vote at the very same place. That would mean some people would have to travel a very long distance (which would certainly influence participation in the elections). What is more, imagine how big the polling station would have to be to actually host those millions of voters. And how long the queue to actually cast a vote would be. A polling station that big would have to be a dedicated building built just for that purpose (and of no other use outside the elections). Elections organized this way would only be possible with a flawless voter register. Any small discrepancy in recognizing or registering a voter would clog the queue of people waiting to cast their vote. This election would be the ultimate hell for the electoral committee. They would need, literally, weeks if not months before a single committee counted and verified several million ballots. And imagine that some of the electoral committee members fall ill or go on strike. As there is only one electoral committee in the whole country, there is no replacement at hand. By this moment, you are probably getting irritated and thinking “Who on Earth would go for such downright insanity?!”

Well, you might be surprised to find out that this is exactly the way our legacy relational SQL databases operate. They try to store all data in the same table(s). As a result, the computer that has to handle that much information must possess immense capacity, which makes it (like the single polling station for the whole country) both too massive and too expensive. What is more, these cannot be the regular PCs owned by normal users; they have to be dedicated machines (usually not used for anything outside of database tasks). All data points have to be written sequentially, which automatically creates long queues if the data load is massive. God save you if a single line of the intended data input is incorrect. The writing process is then aborted, with the rest of the data still waiting to be inserted. If the main database gets corrupted or some calculation gets stuck, the whole business process freezes. Any slightly more complicated count takes an enormous amount of time. And as more and more data flows into the process, it only gets worse over time.

After seeing all this, Hadoop said: Enough! Its operation and data storage are similar to the way we organize our elections. In Hadoop, the total data is split into a great number of smaller chunks (polling stations), each of which hosts a limited sub-group of (voter) records. Data are stored close to their origin (the same way the polling station is close to your home), and thus reading and writing the data is much faster. When the sum (the election result) is requested, several (polling) places work in parallel to count the votes, instead of a single election committee calculating everything. After the calculation is completed at the individual places (Hadoop calls them “nodes”), the nodes pass their results to a higher level of aggregation, where the results from the nodes are summed into the total result. Since the nodes are calculating rather small and simple amounts, common PCs can serve as hosts for the nodes; no single supercomputer (one mega polling station) is needed. These common PCs can have other uses outside the Hadoop calculation (the same way polling stations turn back into schools and community centers after the elections are over). The analogy holds, as Hadoop nodes have a similar autonomy to the one local electoral committees have in the voting process. The same resources available to a node both steer the read/write process and take part in the calculations (similarly to local election committee members both casting their own votes and taking care of counting all the votes cast). And finally, if one node (local polling station) fails, another neighbouring node can take over the task. This way, the system is much more resilient to failure or overload. (Imagine all voters having a portable electoral ID allowing them to vote at another polling station if their home one caught fire.)
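For readers who prefer code to ballots, here is a minimal Python sketch of the counting scheme described above. It is not Hadoop code: the station names, the candidates and the functions `count_station`, `aggregate` and `run_election` are all made up for illustration, and threads on one machine merely stand in for separate nodes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical ballots, already split into chunks ("polling stations").
polling_stations = {
    "station_a": ["Alice", "Bob", "Alice"],
    "station_b": ["Bob", "Bob", "Carol"],
    "station_c": ["Alice", "Carol", "Alice", "Bob"],
}


def count_station(ballots):
    """Local step: one station counts only its own ballots."""
    return Counter(ballots)


def aggregate(protocols):
    """Aggregation step: sum the per-station protocols into the total."""
    total = Counter()
    for protocol in protocols:
        total.update(protocol)
    return total


def run_election(stations):
    # Stations count in parallel; threads stand in for separate machines.
    with ThreadPoolExecutor() as pool:
        protocols = list(pool.map(count_station, stations.values()))
    return aggregate(protocols)


if __name__ == "__main__":
    print(run_election(polling_stations))
```

The point of the sketch is only the shape of the work: no station ever sees another station's ballots, and the aggregation step only ever handles small summaries, never the raw votes.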

The Hadoop geeks can object that the Hadoop-Elections analogy is not exact on two points. First, in order to prevent data loss, Hadoop intentionally stores copies of the same data chunk on more than one node. If this were replicated in the voting process, people would have to cast the same vote at several polling stations “just in case one of them catches fire”, which is not really a desirable phenomenon in proper elections (leaving aside the fact that there has been one legendary case of this actually happening in Slovakia). Secondly, there is no fixed, appointed state election committee overseeing the work of the local election committees. In Hadoop, any local election committee can take on the role of the national one. However, leaving these two tiny details aside, the Hadoop-Election analogy fits perfectly.
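The first objection, storing copies of each chunk on several nodes, can also be sketched in a few lines of Python. This is a simplification under stated assumptions: the round-robin placement and the names `place_replicas` and `readable_chunks` are invented for this example, while real Hadoop decides placement with its own, more elaborate policy. The default of three copies matches HDFS's usual replication factor.

```python
def place_replicas(chunk_ids, nodes, replication=3):
    """Assign each chunk to `replication` distinct nodes, round-robin.

    Simplified, hypothetical placement; not Hadoop's actual policy.
    """
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    placement = {}
    for i, chunk in enumerate(chunk_ids):
        # Consecutive nodes (wrapping around) hold the copies of this chunk.
        placement[chunk] = [
            nodes[(i + k) % len(nodes)] for k in range(replication)
        ]
    return placement


def readable_chunks(placement, failed_nodes):
    """Return the chunks that still have a copy on a healthy node."""
    return {
        chunk
        for chunk, holders in placement.items()
        if any(node not in failed_nodes for node in holders)
    }


if __name__ == "__main__":
    nodes = ["n1", "n2", "n3", "n4"]
    placement = place_replicas(["c1", "c2", "c3", "c4"], nodes)
    # Two "polling stations on fire": every chunk is still readable.
    print(sorted(readable_chunks(placement, {"n1", "n2"})))
```

With three copies per chunk, data only becomes unreadable when all three nodes holding a given chunk fail at the same time.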

So next time you need to explain to your boss (or somebody else) how Hadoop really works, remind them of elections. Or simply say “Lebo Medved”…

Step 3: Look for real Data Science leader in real Data Science team.

to create robots compatible with humankind, we should provide them with an education comparable to the one we receive as humans. Some disillusion arises from opponents who remind us that we hope robots will be better/fairer than the bar we, humans, set for them. While, let’s be sincere, our education system still produces an abundance of cheaters, violence and human intolerance. Thus, striving for higher moral standards in robots does not seem too unreasonable a request in that context. Be it reality or just our dream, these are the three ways we now try to educate machines:

Quite different approach has been selected by

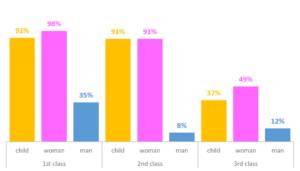

The third approach relies on the development of moral standards that we, humans, receive in early childhood.